By Bij Nader Inzien (editors)

Because not all philosophers working at a Dutch institute speak or write Dutch, BNI will from now on (by way of exception) also publish pieces in English.

– By Sven Nyholm, Assistant Professor of Philosophy and Ethics at the Eindhoven University of Technology –

Here is a thought experiment. A self-driving car is driving along a fairly narrow road, carrying five passengers. Suddenly, a heavy truck veers into the path of the self-driving car. The self-driving car’s internal computer quickly calculates that if it does nothing, there will most likely be a violent collision between it and the truck. As a result, the five passengers are likely to be killed. However, the car also calculates that it can avoid colliding with the truck if it steers right, onto the side-walk. This would save five lives. However, on the side-walk, there is a pedestrian, who would be hit and killed by the self-driving car. For such situations, what should the self-driving car be programmed to do? Should it sacrifice the one to save the five?

The “trolley problem”

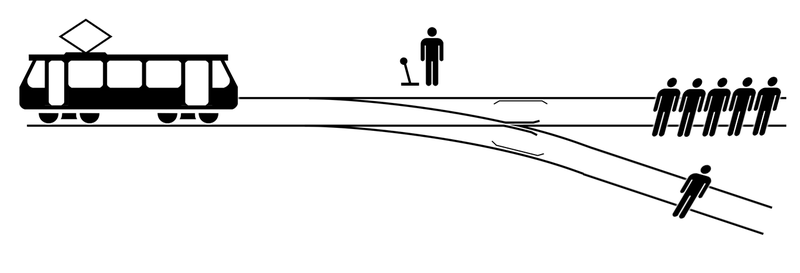

Anybody who has taken a course on moral philosophy is likely to be reminded of the so-called “trolley problem” when they encounter the story above. The trolley problem is the much-discussed thought experiment in which the only way to save five people on some train-tracks is by redirecting the train to a side-track where, unfortunately, one other person is located. In this variation of the case, most people think it’s okay to save the five by sacrificing the one. In another variation of the case, however, most people make a different judgment. In that other variation, saving the five would require pushing a large person off a bridge onto the train-tracks. The large person’s weight is imagined to be substantial enough for the trolley’s brakes to be activated. In this case, most people judge it to be wrong to save the five by sacrificing the one. A large philosophical and psychological literature explores the differences in people’s judgments about these two trolley cases, seeking to explain why there is an important moral difference between these two scenarios.

As noted above, the ethical problem of how self-driving cars should be programmed to respond to accident-scenarios has been compared to the trolley problem. In fact, virtually every article that discusses the ethics of “accident-algorithms” for self-driving cars, whether in academic journals or in the media, makes this comparison. But how helpful is it to compare the ethics of how to program self-driving cars to handle crash-scenarios with the trolley problem? If the analogy were a good one, that would be welcome news: it would mean that there is a huge literature we can draw on when we approach the thorny issue of the ethics of accident-algorithms for self-driving cars. But is it really a good analogy?

My collaborator Jilles Smids and I decided to scrutinize this oft-made analogy between the ethics of accident-algorithms for self-driving cars and the philosophy of the trolley problem. Our conclusion ended up being a skeptical one: the analogy has important limits. Specifically, we found that there are three important differences between the ethics of self-driving cars and the philosophy of the trolley problem. For this reason, we think that it is best not to get too excited about the prospect of bringing the philosophy and psychology of the trolley problem to bear on the real-world ethical issue of how to program self-driving cars to handle accident-scenarios.

Differences between trolley cases and self-driving cars

The first key difference has to do with the two different decision-making situations we have here. In the trolley problem, the person facing the decision is imagined to be right in the middle of the situation, having to make a split-second decision then and there. In contrast, when we program self-driving cars for how they should react in different potential accident-scenarios, we are engaging in prospective decision-making or contingency-planning about future scenarios that may or may not actually arise. Moreover, in the trolley problem, the person making the decision is supposed to focus only on a small set of considerations, leaving everything else aside. In one variation, the person is only supposed to focus on how saving the five would require sacrificing one by redirecting the train. In the other variation, saving the five requires physically pushing a person onto the train-tracks into the path of the trolley. These are the only considerations we are supposed to take into account. In contrast, when we think about how to program self-driving cars to handle accident-scenarios, it would be bizarre to only take some small and limited set of considerations into account. Rather, we should try to take everything that might possibly be relevant into account before we make a decision about how cars should be programmed. And rather than leaving the decision in the hands of just one person as in the trolley examples, the decision about how to program self-driving cars to handle accident-scenarios should be one about which there is a wider discussion involving all the most important stakeholders. In sum, the first important problem with the analogy between the ethics of accident-algorithms for self-driving cars and the philosophy of the trolley problem is that we have two very different kinds of decision-making situations here.

The second important disanalogy has to do with the role of legal and moral responsibility. When philosophy teachers introduce the trolley problems in ethics-courses, it almost always happens that some student will raise a question about the consequences of redirecting the trolley or of pushing the large person onto the track. Will one not be blamed for this, or possibly sent to prison for murder? The way philosophy teachers tend to reply is that we should put questions of legal and moral responsibility aside, and simply focus on the question of what the right decision in this situation would be. In contrast, when it comes to the problem of how to program self-driving cars to handle crash-scenarios involving ethical dilemmas, there is no way we can avoid taking issues of legal and moral responsibility into account. In real life, when any human being is injured or killed, there immediately arises a discussion of whether anybody is to blame for this, and whether there is somebody who should be punished. This is especially true if a human being is injured or killed as a result of decisions made by somebody else. If somebody dies because a self-driving car was programmed to handle accident-scenarios in certain ways, the question of moral and legal responsibility cannot possibly be ignored. So here we have another very important difference between the ethics of accident-algorithms and philosophizing about trolley cases: while it may be defensible to set aside issues of legal and moral responsibility in the latter case, it is not defensible to ignore issues of legal and moral responsibility in the former case.

The third key difference has to do with risks and uncertainty. In discussions of the trolley problem, it is stipulated that the person facing the dilemma knows with certainty what the results of redirecting the train or pushing the large person onto the tracks would be. It is assumed that it is a known and certain fact that if the one is sacrificed, then the five will be saved. The question is what the right decision is in light of the facts. Is this anything like the epistemic situation faced by those reasoning about how to program self-driving cars to react to accident-scenarios? In short, no. The ethics of accident-algorithms for self-driving cars inevitably involves difficult risk-assessments about hypothetical future scenarios about which there can be no certainty. In real life, there are always many variables, not least in traffic, with many different objects moving at high speeds in different types of weather conditions. We cannot be certain how things will play out in the different scenarios that self-driving cars will be in where accidents are unavoidable. Instead, we have to make more or less well-informed predictions about which possible courses of events are more or less likely. We have to make difficult and imprecise assessments of the risks associated with the different possible ways in which self-driving cars could be programmed to react. This constitutes another important difference between this real-world issue and the imagined scenario that is part of the trolley problem thought experiments. This is important because uncertainty and risks pose ethical challenges that do not arise when all the relevant facts are known and fully certain.

It turns out, then, that we should be careful about drawing too close an analogy between the ethics of how to program self-driving cars to respond to unavoidable crashes and the philosophy and psychology of the trolley problem. Hence, for guidance about the ethics of self-driving cars, the literature on the trolley problem is ultimately less helpful than one might initially think.

Read more:

- If you want fuller explanations of the points made in the paragraphs above, check out “The Ethics of Accident-Algorithms for Self-Driving Cars: An Applied Trolley Problem?”, which is available in open access at the website of the philosophy journal Ethical Theory and Moral Practice. [link]

- See also the ‘Incomplete, Chronological, Continuously Updated List of Articles About Murderous Self-Driving Cars and the Trolley Problem’ here.

- See also ‘Zelfrijdende auto’s en het Trolleyprobleem’ on BNI.